Agentic Ops: When to Trust an AI and When to Trap It

Oct 24, 2025

Agentic AI can read, decide, and act. That power compounds value only when the system is designed to be reliable, observable, and controllable by operators, not just data teams. The question is not whether to automate. It is which decisions to automate, under what conditions, with what guardrails, and with what evidence that the outcome is better, cheaper, or faster without increasing risk.

Why this matters now

Models are stronger, interfaces are simpler, and unit economics improve as automation scales. At the same time, error costs, privacy requirements, and cyber exposure increase. Trust must be engineered. That means defined roles, decision gates, fallbacks, logs, and measurable accuracy and impact.

Target outcomes

Higher decision throughput with lower cycle time. Fewer handoffs and less rework. Accuracy above a defined threshold for each use case. Secure data handling with rapid rollback if behavior drifts. A clean audit trail that a buyer, lender, or regulator can follow.

A practical capability ladder

Level 0 Assist: AI drafts, human decides and acts.

Level 1 Recommend: AI recommends with confidence score, human approves.

Level 2 Copilot: AI acts inside guardrails for low-risk decisions, human reviews later.

Level 3 Agentic: AI acts end-to-end, escalates only on thresholds.

Progress up the ladder only when objective thresholds are met for accuracy, latency, and control coverage.
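A promotion check for the ladder can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the names `Thresholds`, `Observed`, and `may_promote`, and the numeric values in the usage note, are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Minimum bar for moving up one level (hypothetical field names)."""
    min_accuracy: float          # fraction correct on a labeled truth set
    max_p95_latency_s: float     # 95th percentile seconds from input to action
    min_control_coverage: float  # fraction of required controls verified


@dataclass(frozen=True)
class Observed:
    """Measurements taken at the current level."""
    accuracy: float
    p95_latency_s: float
    control_coverage: float


def may_promote(current_level: int, observed: Observed, required: Thresholds):
    """Return (ok, reasons). Promotion is allowed only if every threshold is met."""
    reasons = []
    if current_level >= 3:
        reasons.append("already at Level 3")
    if observed.accuracy < required.min_accuracy:
        reasons.append("accuracy below threshold")
    if observed.p95_latency_s > required.max_p95_latency_s:
        reasons.append("latency above threshold")
    if observed.control_coverage < required.min_control_coverage:
        reasons.append("control coverage below threshold")
    return (not reasons, reasons)
```

Calling `may_promote(1, Observed(0.96, 1.2, 1.0), Thresholds(0.95, 2.0, 1.0))` passes every check, while dropping the observed accuracy to 0.90 blocks the move and names the reason. Returning the reasons alongside the verdict keeps the decision reviewable by the business owner.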

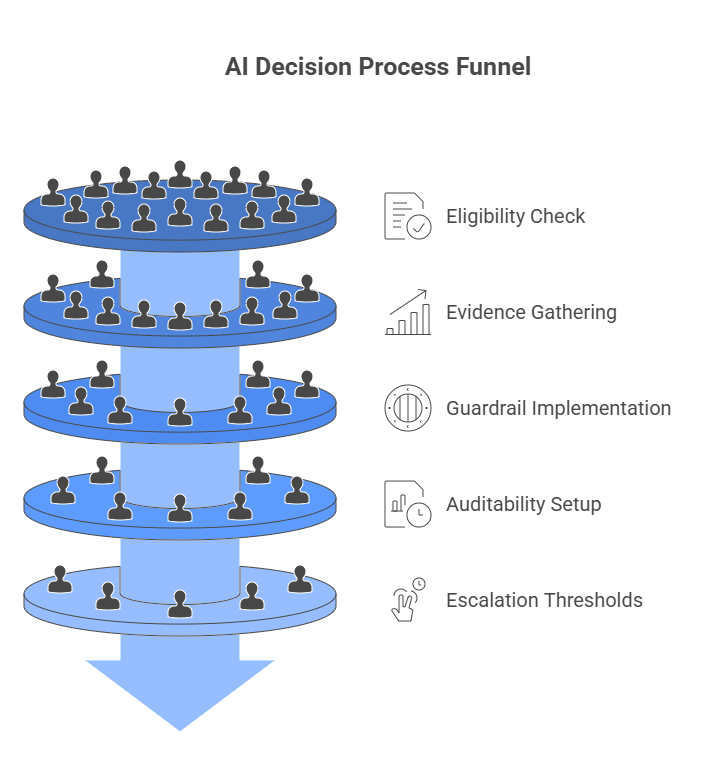

Decision gates that prevent value destruction

Gate 1 Eligibility: the use case must be low criticality or high tolerance for error at the start, with well-defined success labels.

Gate 2 Evidence: a baseline accuracy and cost per decision, plus a benchmark after a controlled pilot.

Gate 3 Guardrails: input filters, policy constraints, rate limits, and a simple kill switch with owner and paging.

Gate 4 Auditability: every action must capture the input, the model version, the features used, the confidence score, and the output with a timestamp.

Gate 5 Escalation: clear thresholds that route to a human, with a path to learn from escalations.
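The Gate 4 evidence can be captured as one structured record per action. The sketch below assumes a JSON-lines log; the class name `AuditRecord`, its field names, and the helper `to_log_line` are illustrative choices, not part of any specific product.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


def _utc_now() -> str:
    # ISO 8601 timestamp in UTC, e.g. 2025-10-24T09:30:00+00:00
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class AuditRecord:
    """One agent action, with the fields Gate 4 asks for."""
    input_text: str
    model_version: str
    features: dict
    confidence: float
    output: str
    timestamp: str = field(default_factory=_utc_now)


def to_log_line(record: AuditRecord) -> str:
    """Serialize a record as one JSON line, ready to append to a log."""
    return json.dumps(asdict(record), sort_keys=True)
```

A record is created at the moment the agent acts, for example `AuditRecord("invoice disputed", "triage-v3", {"amount": 120.0}, 0.91, "billing_error")`, and the resulting line is appended to storage that the agent itself cannot rewrite. The model version string and label here are made up for the example.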

Control patterns that work

Human in the loop for edge cases.

Dual control for sensitive actions such as price changes outside band, data deletion, and payment initiation.

Time-boxing for long-running agents to avoid runaway actions.

Sandboxed connectors to critical systems: read-only access first, then write access with constraints.

A prompt firewall that injects policy and redacts sensitive fields before the model sees them.
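A prompt firewall can start as a small function that redacts obvious sensitive fields and prepends policy text before anything reaches the model. The sketch below is deliberately simple and makes several assumptions: the two regular expressions cover only email addresses and 13 to 16 digit card-like numbers, and the policy wording is a placeholder. A production filter would need broader patterns and testing against real traffic.

```python
import re

# Assumed patterns for illustration; real PII detection needs wider coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional separators

# Placeholder policy text, injected ahead of every request.
POLICY = "Policy: classify only; never initiate payments or delete data."


def redact(text: str) -> str:
    """Replace emails and card-like numbers with fixed tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return CARD.sub("[CARD]", text)


def build_prompt(user_text: str, policy: str = POLICY) -> str:
    """Inject policy first, then the redacted input."""
    return f"{policy}\n\n{redact(user_text)}"
```

Routing every agent call through one `build_prompt` function is also what prevents prompt sprawl: policy lives in one place and changes in one place.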

Use case selection that pays

Start where decisions are frequent and outcomes are observable within days. Examples include pricing override suggestions, AR dispute triage, ticket routing and auto-summaries, vendor risk screening, and email-to-case conversion. Avoid unbounded creative tasks or ambiguous policies until the playbook matures.

Evidence standard

Define the exact metric that matters for each case. Accuracy for classification. Lift in conversion or collection for pricing and AR. Cycle time and first contact resolution for service. Error rate for fulfillment steps. Report both the outcome and the operating cost per decision. Store the before and after for at least three months.

Risk taxonomy and mitigations

Model error risk: measure false positives and false negatives separately, and set asymmetric thresholds if their costs are asymmetric.

Data risk: minimize scope, encrypt data in transit and at rest, restrict tokens, rotate keys.

Operational risk: watch queue backlogs and cycle time, add rate limits, and define cold paths when the model fails.

Security risk: isolate agents, enforce role-based access, and treat connectors as critical code.

Reputation risk: filter outputs for prohibited content and bias, and maintain an incident playbook for rapid retraction.
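The asymmetric threshold in the model error item can be derived from the two error costs. Assuming the model emits a calibrated probability p that acting is correct, acting wrongly costs (1 - p) × C_fp in expectation and holding back wrongly costs p × C_fn, so the agent should act only when p exceeds C_fp / (C_fp + C_fn). The sketch below encodes that rule and counts the two error types separately; the function names are illustrative, and the calibration assumption needs to be checked against the truth set.

```python
def action_threshold(cost_false_positive: float, cost_false_negative: float) -> float:
    """Minimum calibrated probability at which acting beats holding back."""
    if cost_false_positive < 0 or cost_false_negative < 0:
        raise ValueError("costs must be non-negative")
    total = cost_false_positive + cost_false_negative
    if total == 0:
        raise ValueError("at least one cost must be positive")
    return cost_false_positive / total


def error_counts(truth, predicted):
    """Return (false_positives, false_negatives) for paired boolean labels."""
    pairs = list(zip(truth, predicted))
    false_positives = sum(1 for t, p in pairs if p and not t)
    false_negatives = sum(1 for t, p in pairs if t and not p)
    return false_positives, false_negatives
```

If a wrong action costs nine times as much as a missed one, `action_threshold(9, 1)` puts the bar at 0.9 rather than the symmetric 0.5.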

Roles and responsibilities

Business owner defines the decision, the value, and the tolerance for error.

CAIO defines standards, model choices, monitoring, and drift detection.

CIO secures connectors, identity, and data flow.

CFO validates the cost and value case, and the control that protects covenants and headroom if the agent fails.

Operating Partner coaches adoption, enforces cadence, and integrates outcomes into dashboards and routines.

Metrics that matter

Decision accuracy against a labeled truth set.

Cycle time from input to action.

Percent of cases handled fully by agent, with quality above threshold.

Escalation rate and resolution cycle time.

Incident count, time to detection, and time to rollback.

Cost per decision compared to baseline.

Buyer grade measure: percent of KPIs with reproducible lineage and archived artifacts.
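Several of these metrics fall out of a plain decision log. The following sketch assumes each logged decision carries a predicted label, an optional truth label, an escalation flag, a cycle time, and a cost; the `Decision` class and `summarize` function are hypothetical names for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    predicted: str
    truth: Optional[str]   # None until the case has been labeled
    escalated: bool        # True if routed to a human
    cycle_time_s: float    # seconds from input to action
    cost: float            # operating cost of this decision


def summarize(decisions):
    """Compute accuracy, escalation rate, mean cycle time, and cost per decision."""
    n = len(decisions)
    if n == 0:
        raise ValueError("no decisions to summarize")
    labeled = [d for d in decisions if d.truth is not None]
    correct = sum(1 for d in labeled if d.predicted == d.truth)
    return {
        # Accuracy is measured only on cases with a truth label.
        "accuracy": correct / len(labeled) if labeled else None,
        "escalation_rate": sum(1 for d in decisions if d.escalated) / n,
        "mean_cycle_time_s": sum(d.cycle_time_s for d in decisions) / n,
        "cost_per_decision": sum(d.cost for d in decisions) / n,
    }
```

Running the same function over the pre-automation baseline and the pilot period gives the before-and-after comparison on identical definitions.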

Control table example

| Use case | Level | Guardrails | Threshold to escalate | Evidence kept | Owner |
|---|---|---|---|---|---|
| AR dispute triage | 2 | allowed labels, PII redaction, rate limit | confidence below 0.78 or value above set amount | input, label, model version, output, decision time | AR lead |
| Pricing override suggestion | 1 | price bands, customer tier rules | outside band or customer decile 1 | CPQ export, band rules, suggestion and outcome | CRO ops |
| Vendor risk screening | 2 | source whitelist, policy prompts | conflicting signals or missing docs | source snapshot, features, score, action | Procurement |
| Ticket routing and summary | 3 | PII mask, profanity filter | sentiment negative or VIP account | transcript, summary, route, SLA clock | Support ops |
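Two of the escalation thresholds in the table translate directly into routing rules. In the sketch below the 0.78 confidence floor comes from the table, while the value limit stays a parameter because the table only says "set amount". The function names and the sentiment encoding as a string are assumptions for illustration.

```python
AR_CONFIDENCE_FLOOR = 0.78  # from the AR dispute triage row


def ar_dispute_needs_human(confidence: float, dispute_value: float, value_limit: float) -> bool:
    """Escalate when confidence is below the floor or the value exceeds the limit.

    value_limit is supplied by the AR lead; the table does not fix a number.
    """
    return confidence < AR_CONFIDENCE_FLOOR or dispute_value > value_limit


def ticket_needs_human(sentiment: str, is_vip: bool) -> bool:
    """Escalate tickets with negative sentiment or from a VIP account."""
    return sentiment == "negative" or is_vip
```

Keeping each threshold as a named constant or an explicit argument means the weekly review can change a number without touching the routing logic.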

Operating rhythm

Daily agent health checks with green, yellow, or red status and top incidents.

Weekly review of accuracy, escalations, and cost per decision with owners and actions.

Monthly review of drift and retraining needs, with archived snapshots.

Quarterly audit of access, logs, and evidence packs.

Common failure modes

Deploying at Level 3 without a truth set and a working Level 1.

No kill switch or unclear owner.

Agents with write access before read only behavior is studied.

Prompt sprawl with inconsistent policies and no prompt firewall.

Dashboards without dated artifacts that a buyer or regulator can reproduce.

Exit back angle

Package evidence that the agentic system is real and safe. Include accuracy panels, lineage scripts, access inventories, incident drill logs, and cost per decision before and after. Buyers pay for reliable automation that survives turnover and scales without surprises.

VCII Note and Copyright

VCII’s TVC Next treats agentic AI as an operating system. Decisions move up the capability ladder only when evidence meets the threshold and controls are proven in routine. Copyright © 2025 VCII, Meritrium Corp. All rights reserved.
