No AI Allowed? Private Equity Confidentiality Risk in the Age of Generative AI

Jan 12, 2026

Generative AI has changed the confidentiality equation in private markets. Data rooms were built for controlled access, limited copying, and auditability. AI tools compress, synthesize, and repackage sensitive materials at scale, often outside traditional governance. The result is a sharp rise in NDA and VDR clauses that prohibit uploading confidential information into AI systems that may retain, expose, or train on that data.

This is not anti-innovation. It is a rational response to three realities:

- AI increases replication risk: a few pasted paragraphs can generate memos, models, or summaries that travel farther than the original documents.
- Tool sprawl is outpacing governance: plugins, copilots, note takers, browser assistants, and unsanctioned usage create shadow pathways.
- Accountability sits with the GP and the LP: regulators, LP ops teams, and fund counsel increasingly expect explicit controls, not informal “common sense.”

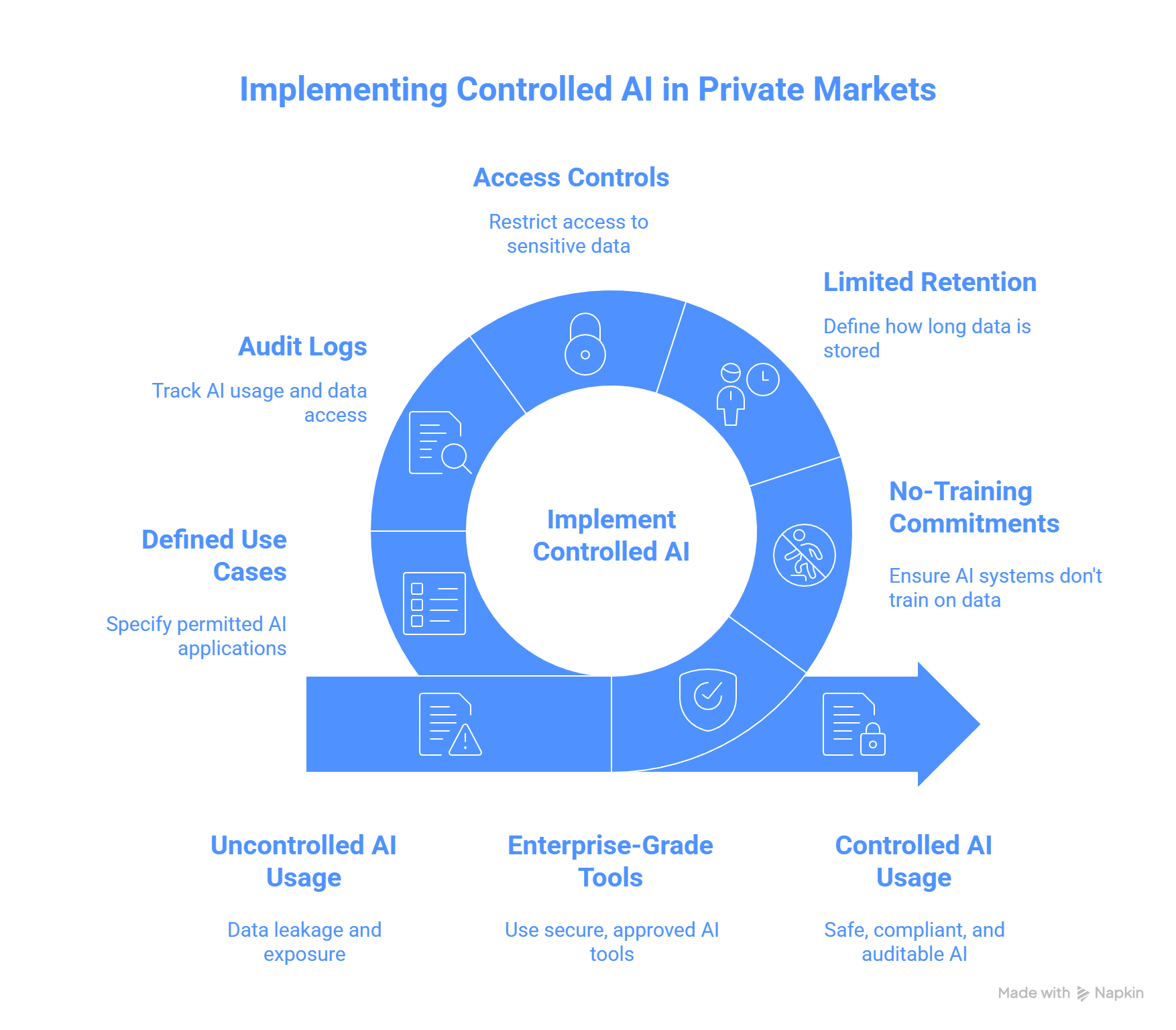

VCII view: blanket bans are a blunt but understandable interim step. The market is moving toward Controlled AI, meaning enterprise-grade tools, explicit no-training commitments, limited retention, access controls, audit logs, and defined permitted use cases. The winner is not the firm that bans AI. The winner is the firm that can prove it uses AI safely.

1) What PE firms are trying to protect

PE firms do not fear AI. They fear loss of control over their edge.

Confidentiality assets inside a typical data room

| Category | Examples | Why it matters |
|---|---|---|
| Deal edge | investment thesis, IC notes, diligence memos | exposes underwriting logic and sourcing advantage |
| Pricing power | price lists, discount rules, churn and cohort data | reveals value capture mechanics and market leverage |
| Operational reality | KPI packs, capacity constraints, sales productivity | shows what is broken and where the upside sits |
| Legal and regulatory | contracts, disputes, IP, compliance | creates litigation and reputational exposure |
| People data | comp bands, org charts, performance notes | privacy exposure, employee claims, HR leakage |
| Fund mechanics | fee terms, side letters, fund docs | negotiating leverage and fundraising sensitivity |

Core risk statement: AI can convert a controlled VDR into an uncontrolled derivative content factory.

2) What AI can do in diligence workflows (practically, not hypothetically)

The issue is not “chat.” The issue is compression + inference + output propagation.

Capability risk map

| AI capability | What it enables | Why it creates risk |
|---|---|---|
| Summarization at scale | rapid review of hundreds of docs | faster review increases copy-paste behavior |
| Pattern extraction | flags hidden signals in churn, pricing, costs | reconstructs sensitive strategy from scattered inputs |
| Artifact generation | memos, models, checklists, board packs | outputs travel and are hard to trace back to sources |
| Context stitching | combines fragments into cohesive narratives | small disclosures become full reconstructions |

Even if the AI tool does not “train,” retention, logging, human review, plugins, and user behavior can still create exposure.

3) The real leakage paths (why clauses look “over strict”)

Most NDAs now aim at multiple leak vectors, not one.

Leakage vectors that matter in private markets

| Leak vector | How it happens | The GP concern |
|---|---|---|
| Training reuse | consumer tools may use inputs for improvement unless contractually excluded | “our data becomes someone else’s asset” |
| Retention and review | prompts and outputs are stored, logged, or reviewed | “more humans touched the data” |
| Third-party tooling | plugins, connectors, meeting bots, browser extensions | “data leaves the perimeter silently” |
| Shadow AI | staff paste content into unapproved tools | “policy says no, behavior says yes” |
| Output leakage | AI outputs circulate as “clean summaries” | “the derivative spreads faster than the original” |
| Vendor chain risk | sub-processors and hosting layers | “who else has access, and where is it stored?” |

Why this is hard: data rooms were designed to control documents. AI changes the risk surface to include prompts, outputs, logs, plugins, and personal accounts.
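One way to keep derivatives traceable is to record provenance for every AI output. The sketch below is a minimal illustration under stated assumptions, not a prescribed standard: the `Source` and `AIOutput` types, the document IDs, and the rule that an output inherits the most sensitive zone among its inputs are all hypothetical. It borrows the green/amber/red zoning described in section 6. An output with no recorded sources is treated as Red, so missing provenance fails closed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import IntEnum


class Zone(IntEnum):
    # Ordered so that a higher value means more sensitive.
    GREEN = 1
    AMBER = 2
    RED = 3


@dataclass(frozen=True)
class Source:
    doc_id: str  # hypothetical identifier for a VDR file or public document
    zone: Zone


@dataclass
class AIOutput:
    text: str
    sources: tuple[Source, ...]
    tool: str
    user: str
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    @property
    def zone(self) -> Zone:
        # A derivative is at least as sensitive as its most sensitive input;
        # an output with no recorded sources fails closed to RED.
        return max((s.zone for s in self.sources), default=Zone.RED)

    def audit_record(self) -> dict:
        # Flat record suitable for an append-only audit log.
        return {
            "tool": self.tool,
            "user": self.user,
            "created_at": self.created_at.isoformat(),
            "zone": self.zone.name,
            "sources": [s.doc_id for s in self.sources],
        }


memo = AIOutput(
    text="Draft screening memo",
    sources=(Source("news-0412", Zone.GREEN), Source("vdr-kpi-pack-q3", Zone.RED)),
    tool="approved-enterprise-assistant",
    user="analyst-07",
)
print(memo.zone.name)  # RED
```

Because the screening memo cites one Red source, it is governed as Red wherever it travels. This is the "derivative spreads faster than the original" problem turned into a checkable rule.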

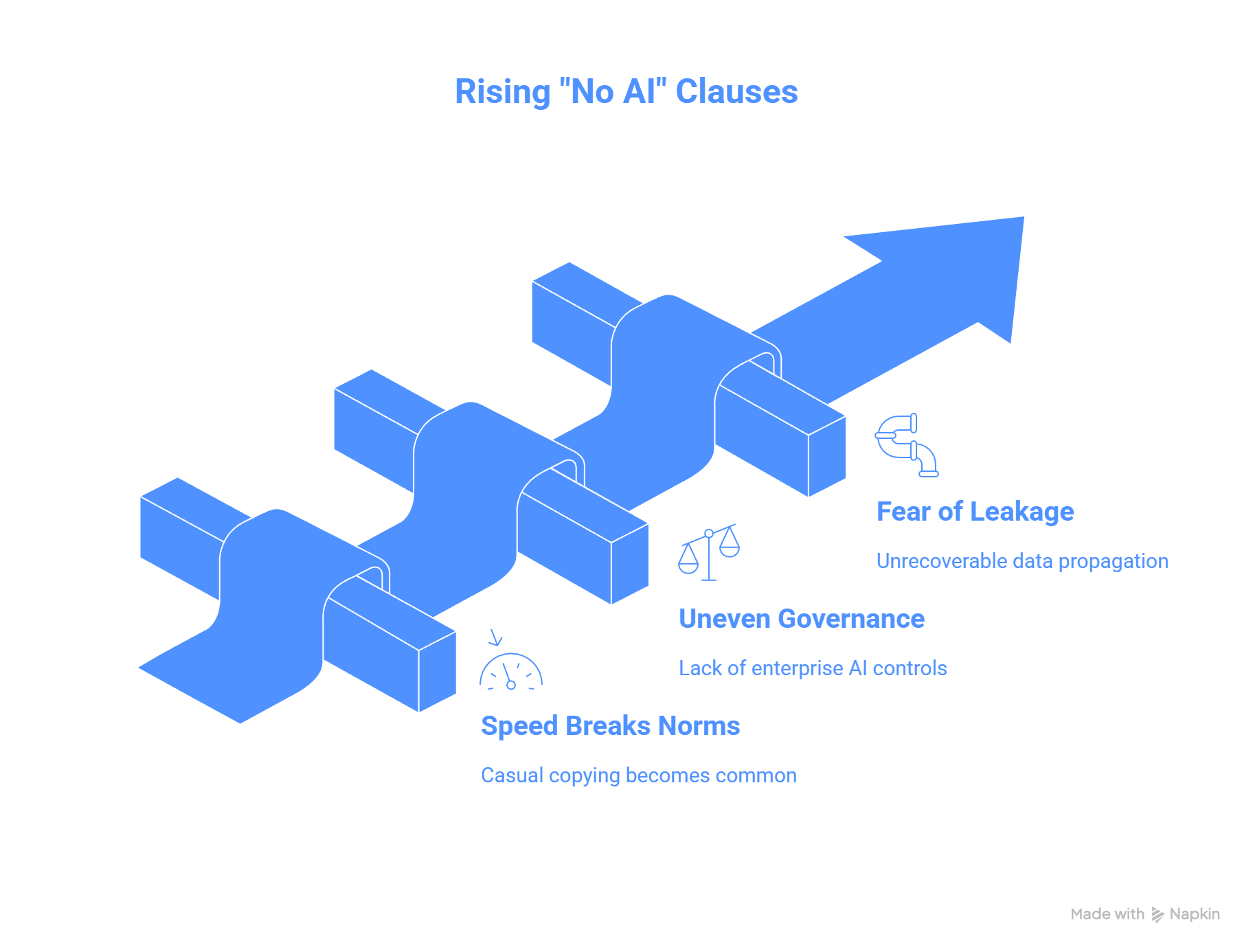

4) Why “no AI” clauses are rising now

Three forces are accelerating adoption of restrictive language:

- Speed breaks norms: when analysis becomes instantaneous, copying becomes casual.
- Governance maturity is uneven: some teams have enterprise AI, logging, and controls. Many do not. NDAs default to the lowest common denominator.
- Fear of irreversible leakage: a document leak is painful. A leak that ends up in model training or propagates through multiple systems feels unrecoverable, even if that fear is sometimes overstated.

Directional signals: figures such as “50%+ of PE firms implementing AI policies” and “high shadow AI usage in finance” help explain why legal teams are acting quickly. Treat them as trend markers, not precise measurements, unless primary sources are available for each number.

5) Is this overboard? Sometimes. Is it rational? Often.

When a full prohibition is defensible

- VDR content includes customer economics, pricing, contracts, debt terms, and litigation materials.
- The receiving party cannot demonstrate enterprise controls.
- The GP has prior exposure incidents or heightened regulatory sensitivity.

When it becomes counterproductive

- Routine screening and public-market research.
- First-pass DDQ analysis of sanitized material.
- Template drafting that does not require confidential inputs.

The solution is not binary. It is data classification + tool certification + monitoring.

6) The Controlled AI model (the emerging middle ground)

A “Controlled AI” clause allows AI use only if strict conditions are met.

Controlled AI requirements (minimum viable set)

| Control | What “good” looks like |
|---|---|
| No training commitment | written vendor commitment that your data is not used to train models |
| Retention limits | defined retention windows, deletion support, minimal logging |
| Access controls | SSO, RBAC, least privilege, no personal accounts |
| No external connectors | plugins and connectors disabled unless approved |
| Auditability | logs, export controls, incident traceability |
| Approved use cases | clear list of permitted tasks and prohibited tasks |
| Data zoning | green, amber, red data classification with matching rules |
| Vendor due diligence | sub-processor disclosure, hosting location, security posture |
| Output governance | guidance on where outputs can be stored and shared |
| Training and enforcement | mandatory training, spot checks, clear consequences |
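The table is a minimum viable set, so it can be encoded as an all-or-nothing checklist. This is a hedged sketch. The `ToolAssessment` fields simply mirror the ten rows of the table. Reducing each control to a single yes/no answer is a simplifying assumption, because a real vendor review produces evidence rather than booleans.

```python
from dataclasses import dataclass, fields


@dataclass
class ToolAssessment:
    # Hypothetical yes/no answers from vendor and tool due diligence,
    # one field per row of the Controlled AI requirements table.
    no_training_commitment: bool
    retention_limits: bool
    access_controls: bool
    no_external_connectors: bool
    auditability: bool
    approved_use_cases: bool
    data_zoning: bool
    vendor_due_diligence: bool
    output_governance: bool
    training_and_enforcement: bool


def missing_controls(assessment: ToolAssessment) -> list[str]:
    """Names of controls that are not yet satisfied."""
    return [f.name for f in fields(assessment) if not getattr(assessment, f.name)]


def qualifies_as_controlled_ai(assessment: ToolAssessment) -> bool:
    # Minimum viable set: a single gap disqualifies the tool.
    return not missing_controls(assessment)


candidate = ToolAssessment(
    no_training_commitment=True,
    retention_limits=True,
    access_controls=True,
    no_external_connectors=False,  # e.g. a browser plugin is still enabled
    auditability=True,
    approved_use_cases=True,
    data_zoning=True,
    vendor_due_diligence=True,
    output_governance=True,
    training_and_enforcement=True,
)
print(missing_controls(candidate))  # ['no_external_connectors']
```

Returning the list of gaps, rather than only a pass/fail result, gives a receiving party a concrete remediation list to bring to a carve-out negotiation.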

VCII 3-zone diligence workflow (simple, enforceable)

| Zone | Data type | AI rule |
|---|---|---|
| Green | public info, websites, news, market reports | AI allowed |
| Amber | sanitized DDQs, redacted excerpts, non-sensitive summaries | AI allowed in approved enterprise tools only |
| Red | VDR docs, contracts, customer data, KPI packs, LPAs, pricing files | no AI unless Controlled AI environment is contractually approved |

This model lets firms move fast where risk is low and slow where risk is existential.
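The three zones translate directly into a default-deny decision rule. The following is a minimal sketch. It assumes a hypothetical three-tier classification of tools (unapproved, approved enterprise, Controlled AI). It also assumes a per-deal flag that records whether the NDA or VDR terms explicitly approve a Controlled AI environment for Red material.

```python
from enum import Enum, IntEnum


class Zone(IntEnum):
    GREEN = 1  # public info, websites, news, market reports
    AMBER = 2  # sanitized DDQs, redacted excerpts, non-sensitive summaries
    RED = 3    # VDR docs, contracts, customer data, KPI packs, LPAs, pricing files


class ToolTier(Enum):
    # Hypothetical tiers for illustration.
    UNAPPROVED = "unapproved"     # consumer or shadow tools
    ENTERPRISE = "enterprise"     # firm-approved enterprise tool
    CONTROLLED_AI = "controlled"  # enterprise tool meeting the Controlled AI requirements


def ai_use_allowed(zone: Zone, tool: ToolTier, red_approved_in_contract: bool = False) -> bool:
    if zone is Zone.GREEN:
        return True
    if zone is Zone.AMBER:
        return tool in (ToolTier.ENTERPRISE, ToolTier.CONTROLLED_AI)
    # RED: no AI unless a Controlled AI environment is contractually approved.
    return tool is ToolTier.CONTROLLED_AI and red_approved_in_contract


print(ai_use_allowed(Zone.RED, ToolTier.CONTROLLED_AI))        # False
print(ai_use_allowed(Zone.RED, ToolTier.CONTROLLED_AI, True))  # True
```

The contractual flag defaults to `False`, so forgetting to set it leaves Red content blocked rather than exposed. Even a fully certified tool needs deal-level approval before it touches VDR material.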

7) A practical negotiation menu (for allocators and advisors)

If you receive an AI prohibition clause, three reasonable responses exist:

- Accept: use AI on Green material only. Keep Red content strictly out of models.
- Carve-out: propose Controlled AI with explicit requirements (no training, retention limits, audit logs, no plugins).
- Redaction pathway: permit AI only on extracts that are sanitized inside the perimeter and approved for Amber use.

This keeps the GP protected while allowing modern workflows.
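The redaction pathway depends on sanitizing extracts before they leave the Red zone. The sketch below shows the mechanics only and comes with loud caveats. The regex patterns and the `[PARTY]`-style placeholders are hypothetical. Pattern-based masking also misses context: a distinctive contract clause can identify a company with no names or numbers in it. A human reviewer should therefore still approve each extract before it is treated as Amber.

```python
import re

# Hypothetical patterns for illustration; a production rule set would be
# far broader and reviewed by counsel.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "AMOUNT": re.compile(
        r"[$€£]\d+(?:,\d{3})*(?:\.\d+)?(?:\s?(?:k|m|bn|million|billion)\b)?",
        re.IGNORECASE,
    ),
    "PERCENT": re.compile(r"\b\d+(?:\.\d+)?\s?%"),
}


def redact(text: str, named_parties: list[str]) -> str:
    """Mask emails, amounts, and percentages, then known party names."""
    # Patterns run first so a name embedded in an email address is masked
    # together with the whole address.
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    for name in named_parties:
        text = re.sub(re.escape(name), "[PARTY]", text, flags=re.IGNORECASE)
    return text


extract = "Acme Corp churn was 4.5% on ARR of $1,250,000, per cfo@acme.com."
print(redact(extract, ["Acme Corp"]))
# [PARTY] churn was [PERCENT] on ARR of [AMOUNT], per [EMAIL].
```

Running the redaction inside the perimeter means the raw extract never reaches the AI tool, which is what distinguishes this pathway from simply trusting a vendor's retention terms.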

8) The hard truth: shadow AI is the primary operational risk

Most confidentiality failures are not malicious. They are convenience-driven:

- Analyst pastes a clause to “summarize quickly.”
- Associate pastes a KPI pack to “draft a board update.”
- Output is emailed, copied into a memo, stored in a shared folder.
- The derivative spreads, and control is lost quietly.

A ban may reduce this, but only temporarily. The durable fix is governed enablement: approved tools, training, and monitoring.
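Monitoring is the hardest of the three to picture, so here is one possible mechanism. Red documents are fingerprinted as hashed word shingles, and a prompt is flagged when it shares enough shingles with that index and is bound for a tool not approved for Red data. Everything here is an assumption for illustration, including the class name, the 5-word shingle size, and the 20% threshold. It is not a substitute for dedicated data loss prevention tooling.

```python
import hashlib


def shingles(text: str, k: int) -> set[str]:
    """SHA-256 hashes of every run of k consecutive lower-cased words."""
    words = text.lower().split()
    return {
        hashlib.sha256(" ".join(words[i:i + k]).encode("utf-8")).hexdigest()
        for i in range(len(words) - k + 1)
    }


class RedContentMonitor:
    """Hypothetical check on prompts leaving the perimeter."""

    def __init__(self, red_documents: list[str], k: int = 5, threshold: float = 0.2):
        self.k = k
        self.threshold = threshold
        # Only hashes are stored, not the Red text itself.
        self.index: set[str] = set()
        for doc in red_documents:
            self.index |= shingles(doc, k)

    def overlap(self, prompt: str) -> float:
        """Fraction of the prompt's shingles that also occur in Red documents."""
        prompt_shingles = shingles(prompt, self.k)
        if not prompt_shingles:
            return 0.0  # prompts shorter than k words cannot be matched
        return len(prompt_shingles & self.index) / len(prompt_shingles)

    def should_block(self, prompt: str, tool_approved_for_red: bool) -> bool:
        return not tool_approved_for_red and self.overlap(prompt) >= self.threshold


monitor = RedContentMonitor([
    "The Buyer shall pay a termination fee equal to three percent of the "
    "purchase price if the transaction fails to close."
])
pasted = "Summarize: the buyer shall pay a termination fee equal to three percent of the purchase price"
print(monitor.should_block(pasted, tool_approved_for_red=False))  # True
```

The analyst's "summarize quickly" paste is caught because most of its five-word runs appear verbatim in the indexed clause. A general question about termination fees shares no shingles with the index and passes through, so enablement and monitoring can coexist.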

Conclusion: the new standard is “trust architecture”

The market is moving toward a clear equilibrium:

- AI is allowed for low sensitivity work.
- AI is restricted for high sensitivity work.
- AI is permitted for sensitive work only when controls are provable.

An NDA clause that prohibits AI is a signal. It says: “We do not trust your tooling, your governance, or your staff behavior yet.”

The strategic response is to build an environment that deserves trust, then codify it contractually.

VCII 2026. Copyrighted Material.
