The Ghost in the Machine: When Not to Summon AI in Private Equity

Sep 17, 2025

A hard-nosed field guide for saying no to AI until it can actually move EBITDA, cash, or customer behavior

Everyone is leaning into AI. That does not make every AI initiative smart. In a market where buyers pay for auditable improvements and time is expensive, the winning posture is selective aggression. Use AI where it can convert quickly into cash saved, cash earned, or risk reduced. Say no everywhere else. The discipline is not fear. It is focus.

Below is a practical playbook for when to press pause, what tests to run before you fund a use case, and how to avoid AI theater in portfolio companies.

The If Test: start with value, not voltage

Before you touch a tool, answer one question: if this works, what line on the P and L improves within one or two quarters? Name the line, the owner, the unit of change, and the measurement cadence. If you cannot do that in plain language on a single page, you are not ready to buy anything.

One Page, One Owner, One Number

- One page that ties the use case to a business objective and a P and L line
- One accountable owner with decision authority in the workflow
- One number that defines success and will be reviewed weekly

If you cannot meet this standard, do not start.

Do not use AI when strategy is fuzzy

AI cannot rescue an unclear strategy. It will automate the confusion.

Pause if

- The value creation plan is still a wish list, not a shortlist
- You cannot point to two or three levers where AI could plausibly sharpen demand, price, throughput, support, compliance, or working capital
- Your board materials describe AI in slogans rather than in the language of cash, customers, and capacity

Fix first

Clarify the few moves that matter this year. Align leaders on those moves. Only then pick AI that accelerates them, not distracts from them. High performers make AI a servant to strategy, not the star of the deck.

Do not use AI when ROI is unmeasured

Activity is not progress. Heatmaps are not results. If you cannot show the dollar, you are not ready.

Pause if

- Success metrics read like aspirations: better, faster, smarter
- The business case promises benefits far in the future with no early proof points
- You are in pilot purgatory with no scale path or stop rule

Fix first

Write a two-stage case: near-term, auditable wins in 90 days, and a longer horizon if the first stage pays. Add kill criteria and a date. Escaping pilot purgatory requires defined outcomes, an owner, and a path from test to production, not endless exploration.
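
As a rough illustration, the stop rule can be written down as data plus one decision function. This is a minimal sketch, not a prescribed method: the `PilotCase` fields, the `review` function, and the example thresholds are all hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PilotCase:
    """Stage-one case with an explicit stop rule (all fields are illustrative)."""
    name: str
    owner: str
    kpi: str
    target_lift: float  # minimum KPI improvement that justifies stage two
    kill_date: date     # date on which an unproven pilot is stopped


def review(case: PilotCase, measured_lift: float, today: date) -> str:
    """Return 'scale', 'stop', or 'continue' for a pilot under review."""
    if measured_lift >= case.target_lift:
        return "scale"      # stage one paid; fund the longer horizon
    if today >= case.kill_date:
        return "stop"       # deadline reached without the target lift
    return "continue"       # still inside the agreed window


# Hypothetical example: a collections pilot that must cut DSO by 3 days.
case = PilotCase("collections prioritization", "CFO", "DSO days cut", 3.0, date(2025, 12, 16))
print(review(case, measured_lift=1.0, today=date(2025, 10, 1)))
```

The point of the sketch is that the kill date and target are fixed before the pilot starts, so the review is a lookup rather than a negotiation.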

Do not use AI without adoption by the humans who carry the work

The hardest part is not the model. It is the people. Tools that teams will not use are shelfware.

Pause if

- The target users were not in the room when the use case was scoped
- Incentives do not change with the new way of working
- Training is a slide deck instead of supervised reps on live work
- Executives talk AI but do not touch it themselves

Fix first

Run a small, live workflow with the actual team. Measure time saved, error avoided, or revenue gained. Reward adoption directly. Hands-on leadership matters. Governance bodies emphasize this because adoption risk is often higher than technical risk.

Do not use AI when risks are not owned

AI carries real risk: privacy, bias, model drift, brittleness, cost overrun, vendor lock-in. If you cannot name who owns each risk, you are not ready.

Pause if

- No one is accountable for data lineage and access control
- You have not defined how models will be evaluated, monitored, and retrained
- There is no plan for failure modes, including manual fallback and customer communication

Fix first

Adopt a simple risk checklist from recognized frameworks and make it operational. For each risk, name the owner, control, test, and evidence. Align board oversight with this list. AI risk management is an operating discipline, not a legal paragraph.
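
One way to make the checklist operational is a register that flags any risk missing an owner, control, test, or evidence. The sketch below is an assumption about how such a register might look; `RiskEntry`, `missing_fields`, and `open_items` are hypothetical names, and the sample entries are invented for illustration.

```python
from dataclasses import dataclass

REQUIRED = ("owner", "control", "test", "evidence")


@dataclass
class RiskEntry:
    """One row of a risk register; empty strings mean 'not yet defined'."""
    risk: str
    owner: str = ""
    control: str = ""
    test: str = ""
    evidence: str = ""


def missing_fields(entry: RiskEntry) -> list:
    """List the required fields that are blank for this risk."""
    return [f for f in REQUIRED if not getattr(entry, f).strip()]


def open_items(register: list) -> dict:
    """Map each incompletely owned risk to the fields it still lacks."""
    return {e.risk: missing_fields(e) for e in register if missing_fields(e)}


# Invented sample rows: one complete, one with gaps.
register = [
    RiskEntry("model drift", "Head of Data", "monthly re-evaluation",
              "holdout accuracy check", "evaluation report"),
    RiskEntry("vendor lock-in", owner="CTO"),
]
print(open_items(register))
```

A register with any open items is the signal, in the article's terms, that the risks are not yet owned.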

Do not use AI if it adds complexity faster than clarity

Complexity kills execution. Do not turn a one-page plan into a 60-page system.

Pause if

- The solution introduces new tools, new data flows, and new steps without removing any old ones
- Nontechnical leaders cannot explain how the workflow changes on Monday
- Reporting grows while decisions do not get faster

Fix first

Remove steps as you add capability. Require before and after swimlanes for every use case. If the Monday morning behavior does not change, you do not have a solution. Boards that steer performance, not presentations, enforce this rule.

Do not use AI to chase novelty

Hype is not a business case. Agent labels, autonomous claims, and demos can lure you into expensive experiments.

Pause if

- The vendor sells a category name rather than an outcome
- Your team cannot run a low-tech version of the same logic today
- You are leaning on AI where a rules engine or process fix would suffice

Fix first

Run the simplest test that could work. In fast-moving areas like so-called agentic AI, market noise is high and true maturity is uneven. Reserve capital for repeatable tasks with clear economics.

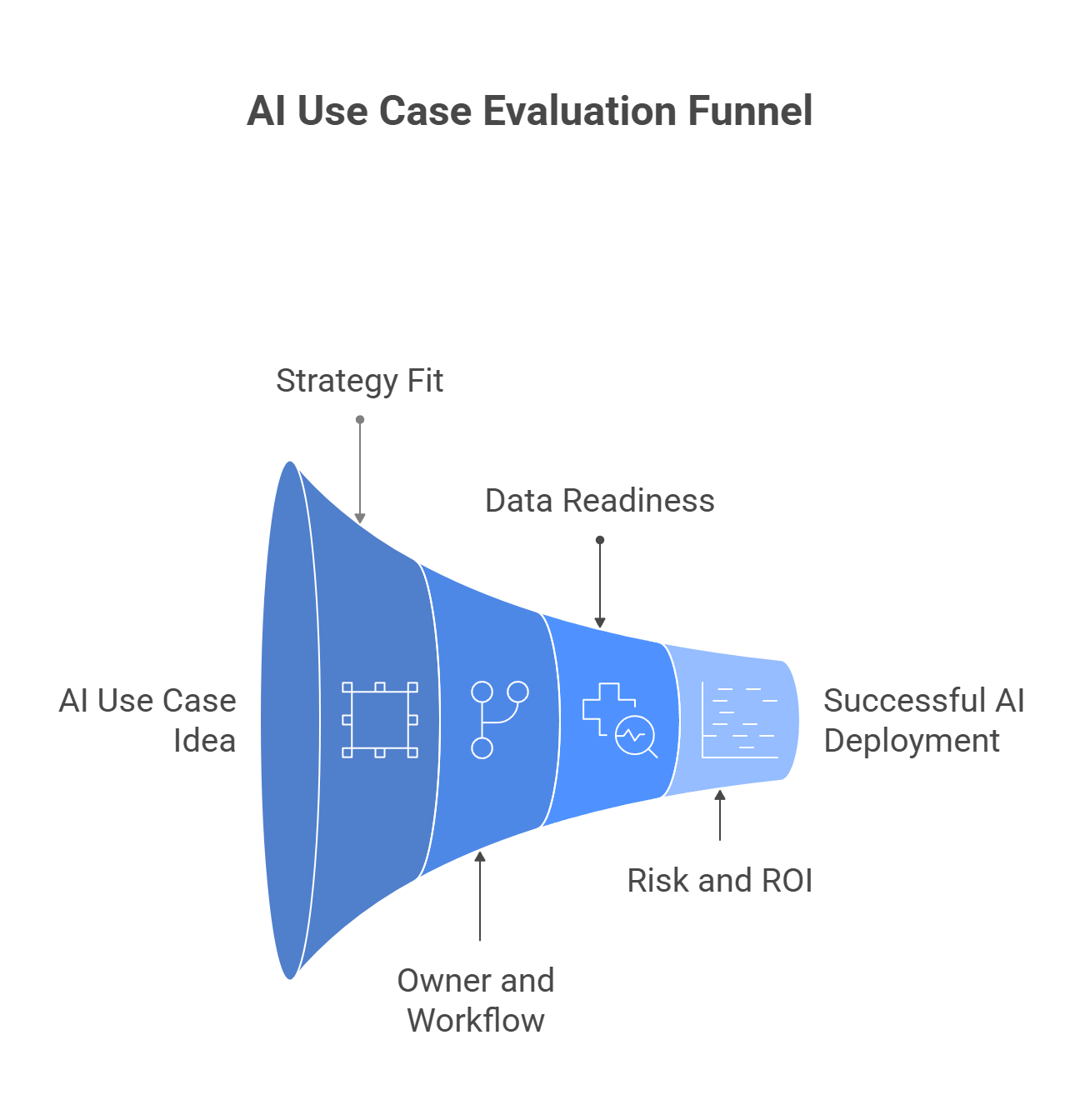

Four gates every PE-backed AI use case must pass

Treat AI like capital. Make it earn the right to deploy.

Gate 1: Strategy fit

Name the lever and the buyer signal it improves.

Gate 2: Owner and workflow

Who owns it, and where does it live in the process? No owner, no project.

Gate 3: Data readiness

What data, from where, at what quality? What is the plan to remediate gaps?

Gate 4: Risk and ROI

What can go wrong, how it is monitored, how money is made or saved. Include kill criteria.

If a use case fails any gate, rework it or stop.
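
The gate sequence can be sketched as an ordered check that stops at the first failure. This is an illustrative sketch only: the gate keys and the `first_failed_gate` helper are hypothetical, and each yes/no answer would in practice come from a human review, not from code.

```python
from typing import Dict, Optional

# Gates in the order they are listed above (key names are illustrative).
GATES = ("strategy_fit", "owner_and_workflow", "data_readiness", "risk_and_roi")


def first_failed_gate(answers: Dict[str, bool]) -> Optional[str]:
    """Return the first gate that is failed or unanswered, or None if all pass."""
    for gate in GATES:
        if not answers.get(gate, False):  # a missing answer counts as a failure
            return gate
    return None


# Hypothetical use case that has no plan for its data gaps yet.
use_case = {"strategy_fit": True, "owner_and_workflow": True, "data_readiness": False}
failed = first_failed_gate(use_case)
print("rework or stop at: " + failed if failed else "cleared to deploy")
```

Treating an unanswered gate as a failure keeps the default at "no" until someone has done the work to say "yes".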

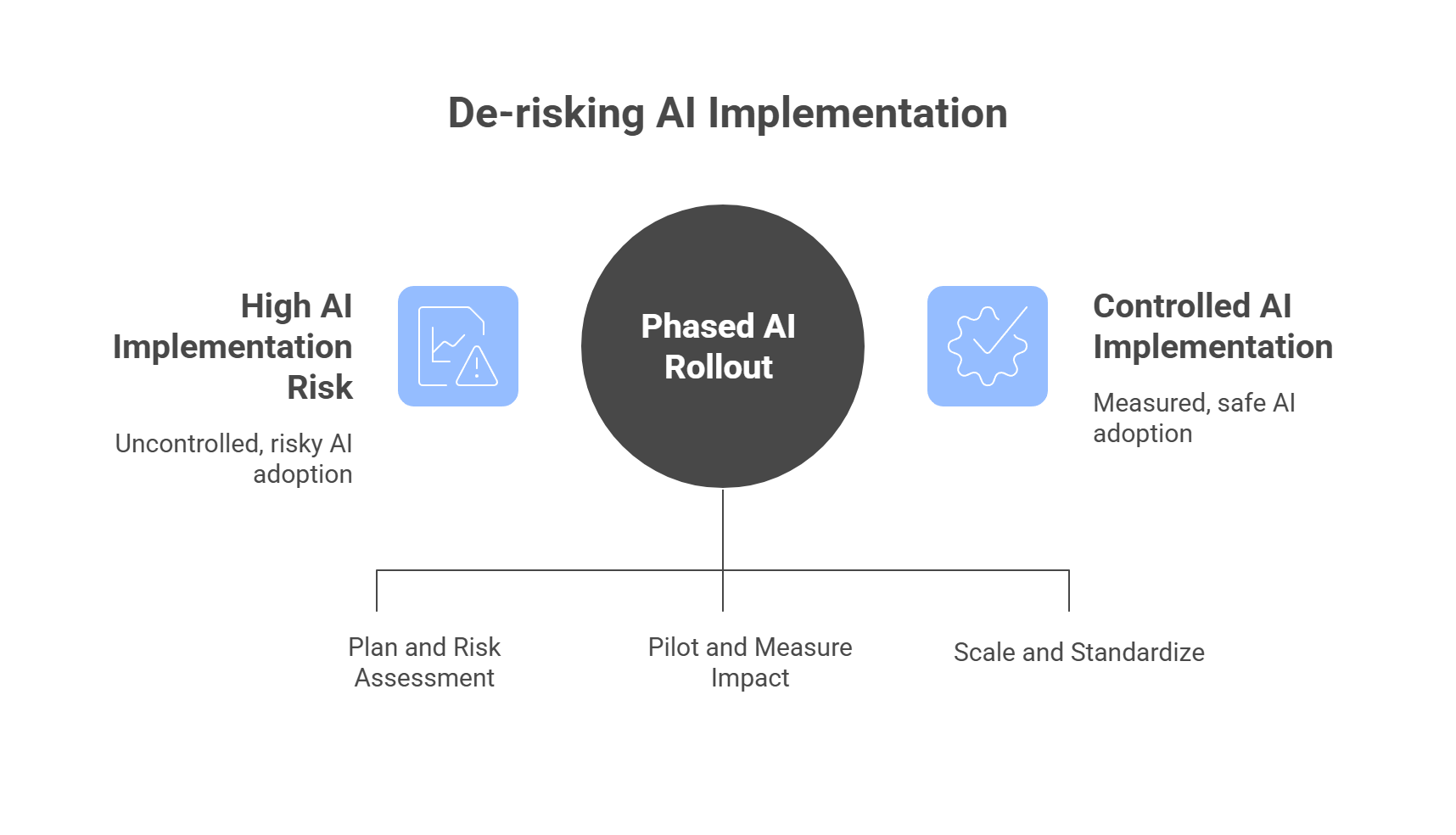

A one-week, one-month, one-quarter plan to de-risk AI

Week 1

- Write the one-page case with owner, KPI, and kill switch
- Map the current workflow and the intended change
- Run a tabletop risk review using a recognized checklist

Month 1

- Pilot in one team with live work
- Publish weekly deltas on time saved, errors avoided, revenue gained
- Remove at least one legacy step from the process

Quarter 1

- Scale to more teams only if KPI lifts persist
- Put the new way into standard work and training
- Report to the board using the same KPI and risk format you started with

If the lift stalls or risks rise, stop, learn, and reallocate.
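
What counts as a lift that "persists" is a judgment the owner has to make in advance. One possible reading, shown here as a sketch, is that the weekly delta must meet a minimum for several consecutive weeks; the `lift_persists` function, the four-week window, and the sample numbers are assumptions, not figures from this playbook.

```python
from typing import Sequence


def lift_persists(weekly_lifts: Sequence[float], min_lift: float, weeks: int = 4) -> bool:
    """True only if each of the most recent `weeks` deltas meets the minimum lift."""
    recent = list(weekly_lifts)[-weeks:]
    # Too little history is treated as "not yet proven", not as success.
    return len(recent) == weeks and all(x >= min_lift for x in recent)


# Invented weekly deltas (hours saved per agent) from a one-team pilot.
pilot = [0.5, 1.2, 2.1, 2.3, 2.0, 2.4]
print("scale" if lift_persists(pilot, min_lift=2.0) else "hold or stop")
```

A single strong week does not pass this check, which matches the intent of scaling only after the gain has held up under normal operating conditions.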

A short list of AI use cases worth a second look

Focus on boring, bankable motion where the economics are obvious.

- Forecasting demand to reduce stockouts and working capital
- Dynamic price guidance that improves realization without discounting
- Assisted service to shorten handle time and raise first contact resolution
- Collections prioritization to cut DSO and bad debt
- Content automation for repetitive, high-volume communication

Each of these has a measurable line of sight to cash, margin, or cycle time. Start there. Expand only after wins are real.

Bottom line

Summoning AI is not the point. Delivering outcomes is. In a discipline built on underwriting, treat AI the same way you treat debt, equity, and time. Deploy it when it predictably improves the business and the customer. Decline it when it crowds the calendar, bloats the process, or hides behind theater. The ghost in the machine is not magic. It is leverage. Use it like a professional.

Copyright © 2025 VCI Institute. All rights reserved.

References and source notes

NIST AI Risk Management Framework 1.0 and companion guidance for generative AI, used here to anchor risk identification, ownership, and controls.

McKinsey State of AI 2024 and 2025 surveys, referenced for the link between strategy fit, high performers, and measured value rather than exploratory hype.

Gartner Hype Cycle commentary on generative AI entering a trough of disillusionment, underscoring the need to prove ROI before scaling.

CIO and related analyses on breaking AI pilot purgatory and scaling beyond experiments, informing the kill criteria and cadence recommendations.

NACD guidance on board technology oversight and AI governance, supporting the call for board-aligned metrics and adoption accountability.

Reuters reporting on agentic AI hype and project cancellations, reinforcing caution against novelty-driven initiatives without economics.
